
Disclaimer: This article contains references to the words master and slave. I recognize these as exclusionary words. The words are used in this article for consistency because it’s currently the words that appear in the software, in the UI, and in the log files. When the software is updated to remove the words, this article will be updated to be in alignment.

Please note that this article was written in 2009. I do not know whether Lefthand has changed its solution since then. Please check with your HP representative for updates!

I recently had the opportunity to deliver a virtual infrastructure that uses the HP Lefthand SAN solution. Setting up a Lefthand SAN is not that difficult, but there are some factors to take into consideration when planning and designing a Lefthand SAN properly. These are my lessons learned.

Lefthand, not the traditional Head-Shelf configuration

HP Lefthand SANs are based on iSCSI and are formed by storage nodes. In traditional storage architectures, a controller manages arrays of disk drives. A Lefthand SAN is instead composed of storage modules. A Network Storage Module 2120 G2 (NSM node) is basically an HP DL185 server with 12 SAS or SATA drives running the SAN/iQ software.

This architecture enables the aggregation of multiple storage nodes to create a storage cluster and this solves one of the toughest design questions when sizing a SAN. Instead of estimating growth and buying a storage array to “grow into”, you can add storage nodes to the cluster when needed. This concludes the sales pitch.

But this technique of aggregating separate NSM nodes into a cluster raises some questions, such as:

- Where will the blocks of a single LUN be stored; all on one node, or across nodes?

- How are LUNs managed?

- How is datapath load managed?

- What is the impact of the failure of an NSM node?

Block placement and Replication level

The placement of the blocks of a LUN depends on the configured replication level, a feature Lefthand calls Network RAID Level. Network RAID stripes and mirrors multiple copies of data across a cluster of storage nodes. One of four levels of synchronous replication can be configured per LUN:

- None

- 2-way

- 3-way

- 4-way

Blocks will be stored on storage nodes according to the replication level. If a LUN is created with the default replication level of 2-way, two authoritative blocks are written at the same time to two different nodes. If a 3-way replication level is configured, blocks are stored on three nodes, and with 4-way replication on four nodes. The replication level cannot exceed the number of nodes in the cluster.

SAN/iQ will always start writing the next block on the second node that contains the previous block. A picture is worth a thousand words.
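The placement rule described above can be sketched in a few lines of Python. This is only an illustration of the behavior as described here, not SAN/iQ's actual algorithm: the function name `replica_nodes`, the node names, and the wrap-around from the last node back to the first are all assumptions.

```python
def replica_nodes(block_index, node_order, replication_level):
    """Return the nodes assumed to hold the copies of one block.

    Assumption: block N starts on node N (modulo the node count) and its
    copies land on the following consecutive nodes, wrapping around.
    """
    if not 1 <= replication_level <= len(node_order):
        raise ValueError("replication level must be between 1 and the node count")
    start = block_index % len(node_order)
    return [
        node_order[(start + offset) % len(node_order)]
        for offset in range(replication_level)
    ]


# Hypothetical four-node cluster, listed in the order the nodes were added.
nodes = ["NSM-1", "NSM-2", "NSM-3", "NSM-4"]
for block in range(4):
    print(block, replica_nodes(block, nodes, 2))
```

With 2-way replication, each block's second copy lands on the node where the next block starts, which is the pattern the paragraph above describes.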

Node order

The order in which blocks are written to the LUN is determined not by node hostname but by the order in which the nodes are added to the cluster. The order of the placement of the nodes is extremely important if the SAN will span two locations. More information on this design issue later.

Virtual IP and VIP Load Balancing

When setting up a Lefthand cluster, a Virtual IP (VIP) needs to be configured. A VIP is required for iSCSI load balancing and fault tolerance. One NSM node will act as the VIP for the cluster; if this node fails, the VIP function will automatically fail over to another node in the cluster.

The VIP functions as the iSCSI portal; ESX servers use the VIP for discovery and to log in to the volumes. ESX servers can connect to volumes in two ways: using the VIP alone, or using the VIP with the load balancing option (VIPLB) enabled. When VIPLB is enabled on LUNs, the SAN/iQ software balances connections across the nodes of the cluster.

Configure the ESX iSCSI initiator with the VIP as the destination address. The VIP will supply the ESX servers with a target address for each LUN. VIPLB will transfer initial communication to the gateway connection of the LUN. Running the vmkiscsi-util command shows the VIP as a portal and another IP address as the target address of the LUN:

[root@esxhost00 vmfs]# vmkiscsi-util -i -t 58 -l

***************************************************************************

Cisco iSCSI Driver Version … 3.6.3 (27-Jun-2005 )

***************************************************************************

TARGET NAME : iqn.2003-10.com.lefthandnetworks:lefthandcluster:1542:volume3

TARGET ALIAS :

HOST NO : 0

BUS NO : 0

TARGET ID : 58

TARGET ADDRESS : 148.11.18.60:3260

SESSION STATUS : ESTABLISHED AT Fri Sep 11 14:51:13 2009

NUMBER OF PORTALS : 1

PORTAL ADDRESS 1 : 148.11.18.9:3260,1

SESSION ID : ISID 00023d000001 TSIH 3b1

Gateway Connection

This target address is what Lefthand calls a gateway connection. The gateway connection is described in the Lefthand SAN User Manual (page 561) as follows:

Use iSCSI load balancing to improve iSCSI performance and scalability by distributing iSCSI sessions for different volumes evenly across storage nodes in a cluster. ISCSI load balancing uses iSCSI Login-Redirect. Only initiators that support Login-Redirect should be used. When using VIP and load balancing, one iSCSI session acts as the gateway session. All I/O goes through this iSCSI session. You can determine which iSCSI session is the gateway by selecting the cluster, then clicking the iSCSI Sessions tab. The Gateway Connection column displays the IP address of the storage node hosting the load balancing iSCSI session.

SAN/iQ will designate a node to act as the gateway connection for the LUN, and the VIP will send the IP address of this node as the target address to all the ESX hosts. This means every host that uses the LUN will connect to that specific node, and this storage node will handle all IO for this LUN.

This leads to the question: how will the gateway connection (GC) handle IO for blocks not stored locally on that node? When a block is requested that is stored on another node, the GC will fetch this block. All nodes are aware of which block is stored on which node. The GC node will fetch the block from one of the nodes on which it is stored and send the result back to the ESX host.

Gateway Connection failover

Most clusters host more LUNs than they have nodes. This means that each node will hold the gateway connection role for multiple LUNs. If a node fails, its GC roles will be transferred to the other nodes in the cluster. But when the NSM node comes back online, the VIP will not fail back the GC roles. This creates an imbalance in the cluster, which needs to be resolved as quickly as possible. This can be done by issuing the RebalanceVIP command for the volume from the CLI.

Ken Cline asked me the question:

How do I know when I need to use this command? Is there a status indicator to tell me?

Well actually there isn’t and that is exactly the problem!

After a node failure, you need to be aware of this behavior and you will have to rebalance each volume yourself by running the RebalanceVIP command. The Lefthand CMC does not offer this option or any sort of alert.
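Since there is no built-in indicator, one way to spot the imbalance is to count gateway connections per node yourself. The sketch below assumes you have copied the volume-to-gateway mapping by hand from the Gateway Connection column of the iSCSI Sessions tab; the function name, the node and volume names, and the "spread greater than one" threshold are all hypothetical choices, not anything Lefthand provides.

```python
from collections import Counter


def gateway_imbalance(gateway_by_volume, cluster_nodes):
    """Count gateway connections per node and return (counts, spread).

    spread is the difference between the busiest and the idlest node.
    """
    counts = Counter({node: 0 for node in cluster_nodes})
    counts.update(gateway_by_volume.values())
    spread = max(counts.values()) - min(counts.values())
    return dict(counts), spread


# Hypothetical state after nsm-2 failed and came back online:
# its gateway roles moved away and were never failed back.
cluster = ["nsm-1", "nsm-2", "nsm-3"]
gateways = {
    "volume1": "nsm-1",
    "volume2": "nsm-1",
    "volume3": "nsm-3",
    "volume4": "nsm-3",
}

counts, spread = gateway_imbalance(gateways, cluster)
print(counts)
if spread > 1:  # arbitrary threshold chosen for this sketch
    print("Gateway connections are unevenly spread; consider rebalancing")
```

A node with zero gateway connections right after it rejoins the cluster is the telltale sign that volumes need to be rebalanced.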

Network Interface Bonds

How about the available bandwidth? Lefthand nodes come standard with two 1 Gb NICs. The two NICs can be placed in a bond. An NSM node supports three NIC bond configurations:

- Active – Passive

- Link Aggregation (802.3ad)

- Adaptive Load Balancing

The most interesting is Adaptive Load Balancing (ALB). ALB combines the benefits of the increased bandwidth of 802.3ad with the network redundancy of Active-Passive. Both NICs are made active and they can be connected to different switches; no additional configuration at the physical switch level is needed.

When an ALB bond is configured, it creates an interface. This interface balances traffic through both NICs. But how will this work with the iSCSI protocol? According to RFC 3720 (http://www.ietf.org/rfc/rfc3720.txt), iSCSI uses command connection allegiance:

For any iSCSI request issued over a TCP connection, the corresponding response and/or other related PDU(s) MUST be sent over the same connection. We call this “connection allegiance”.

This means that the NSM node must use the same MAC address to send the IO back. How will this affect the bandwidth? As stated in the iSCSI SAN Configuration Guide: “ESX Server‐based iSCSI initiators establish only one connection to each target.”

It looks like ESX will communicate with the gateway connection of the LUN over only one NIC. I asked Calvin Zito (http://twitter.com/HPstorageGuy) to educate me on ALB and how it handles connection allegiance.

When you create a bond on an NSM, the bond becomes the ‘interface’ and the MAC address of one of the NICs becomes the MAC address for the bond. The individual NICs become ‘slaves’ at that point. I/O will be sent to and from the ‘interface’ which is the bond and the bonding logic figures out how to manage the 2 slaves behind the scenes. So with ALB, for transmitting packets, it will use both NICs or slaves, but they will be associated with the MAC of the bond interface, not the slave device.

The bond uses the same IP and MAC address of the first onboard NIC. This means the node will use both interfaces to transmit data, but only one to receive.

Chad Sakac (EMC), Andy Banta (VMware) and various other folks have written a multivendor post explaining how ESX and vSphere handle iSCSI traffic. A must-read!

http://virtualgeek.typepad.com/virtual_geek/2009/01/a-multivendor-post-to-help-our-mutual-iscsi-customers-using-vmware.html#more

Design issues

When designing a Lefthand SAN, these points are worth considering:

Network RAID level Write performance

When 2-way replication is selected, blocks are written to two nodes simultaneously. If a LUN is configured with 3-way replication, blocks must be replicated to three nodes. Acknowledgements are given once the blocks are written to cache on all the participating nodes. When selecting the replication level, keep in mind that higher protection levels lead to lower write performance.
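Because the acknowledgement waits for every participating node, the slowest node sets the pace. A minimal sketch of that reasoning, assuming hypothetical per-node cache write latencies (the numbers below are invented for illustration, not measurements):

```python
def write_ack_latency_ms(cache_write_latencies_ms):
    """Return the time until a replicated write can be acknowledged.

    The write is acknowledged only when every participating node has the
    block in cache, so the latency is that of the slowest node.
    """
    if not cache_write_latencies_ms:
        raise ValueError("at least one participating node is required")
    return max(cache_write_latencies_ms)


# Invented latencies (milliseconds) for the nodes taking part in a write.
two_way = [0.4, 0.7]
three_way = [0.4, 0.7, 1.1]

print(write_ack_latency_ms(two_way))
print(write_ack_latency_ms(three_way))
```

Adding a node to the replication set can never make the acknowledgement faster, only equal or slower, which is why higher replication levels cost write performance.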

Raid Levels NSM

Network RAID offers protection against storage node failure, but it does not protect against disk failure within a storage node. Disk RAID levels need to be configured at the storage node level, unlike most traditional arrays, where the RAID level can be configured per LUN. It is possible to mix storage nodes with different RAID configurations within a cluster, but this can lead to a lower usable capacity.

For example, suppose the cluster consists of 12 TB nodes running RAID 10. Each node provides 6 TB of usable storage. When adding two 12 TB nodes running RAID 5, each provides 10 TB of usable storage. However, due to the way the cluster uses capacity, the NSM nodes running RAID 5 will still be limited to 6 TB per storage node. This restriction exists because the cluster operates at the smallest usable per-storage-node capacity.

The RAID level of a storage node must be set before it can join a cluster. Check the RAID level of the existing cluster nodes before configuring the new node, because you cannot change the RAID configuration without deleting data.
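The capacity rule from the example can be written down as a small calculation. This is a sketch under assumptions: the number of RAID 10 nodes (three) is invented because the example does not state it, and dividing by the replication level to estimate space available to volumes is my own simplification.

```python
def cluster_usable_tb(node_usable_tb, replication_level=1):
    """Estimate the capacity a cluster offers to volumes, in TB.

    Every node contributes only as much as the smallest node, and each
    block is assumed to be stored replication_level times.
    """
    smallest = min(node_usable_tb)
    pooled = smallest * len(node_usable_tb)
    return pooled / replication_level


# Three RAID 10 nodes (6 TB usable each, count is hypothetical)
# plus two RAID 5 nodes (10 TB usable each).
mixed = [6, 6, 6, 10, 10]

print(sum(mixed))                   # what the nodes could offer individually
print(cluster_usable_tb(mixed))     # what the cluster actually pools
print(cluster_usable_tb(mixed, 2))  # rough estimate with 2-way replication
```

The gap between the first two numbers is the capacity stranded on the RAID 5 nodes by mixing configurations.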

Combining Replication Levels with RAID levels

RAID levels ensure data redundancy inside the storage node, while replication levels ensure data redundancy at the storage node level. Both higher RAID levels and higher replication levels offer greater data redundancy, but they have an impact on capacity and performance. RAID 5 with 2-way replication seems to be the sweet spot for most implementations, but when highly available data protection is needed, Lefthand recommends 3-way replication with RAID 5, ensuring triple mirroring with 3 parity blocks available.

I would not suggest RAID 0 with replication, because rebuilding a RAID set will always be quicker than copying an entire storage node over the network.

Node placement

As mentioned previously, the order in which blocks are written to the LUN is determined by the order in which the nodes are added to the cluster. When using 2-way replication, blocks are written to two consecutive nodes. When designing a cluster, the order of the placement of the nodes is extremely important if the SAN will be placed in two separate racks or, even better, span two locations.

Because the 2-way replication writes blocks on two consecutive nodes, adding the storage nodes to the cluster in alternating order will ensure that data is written to each rack or site.
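The alternating order can be planned and checked before adding nodes. The sketch below is illustrative only: the function names, node names, and site labels are hypothetical, and the check assumes that the last node in the list pairs with the first one (a wrap-around the article does not confirm).

```python
from itertools import chain, zip_longest


def alternate_sites(site_a_nodes, site_b_nodes):
    """Interleave the nodes of two sites: A1, B1, A2, B2, ..."""
    pairs = zip_longest(site_a_nodes, site_b_nodes)
    return [node for node in chain.from_iterable(pairs) if node is not None]


def every_pair_spans_sites(node_order, site_of):
    """Check that each pair of consecutive nodes sits in different sites.

    Assumption: the last node pairs with the first (wrap-around).
    """
    count = len(node_order)
    return all(
        site_of[node_order[i]] != site_of[node_order[(i + 1) % count]]
        for i in range(count)
    )


site_of = {"A1": "site-a", "A2": "site-a", "B1": "site-b", "B2": "site-b"}
good_order = alternate_sites(["A1", "A2"], ["B1", "B2"])
bad_order = ["A1", "A2", "B1", "B2"]

print(good_order, every_pair_spans_sites(good_order, site_of))
print(bad_order, every_pair_spans_sites(bad_order, site_of))
```

With the second ordering, blocks written to A1 and A2 would have both copies in the same site, so losing that site would lose both copies.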

When nodes are added in the incorrect order, or if a node is replaced, the general settings tab of the cluster properties allows you to “promote” or “demote” a storage node in the logical order. This list determines the “write” order of the nodes.

Management Group and Managers

In addition to setting up data replication, it is important to set up managers. Managers play an important role in controlling data flow and access to clusters. Managers run inside a management group. Several storage nodes must be designated to run the manager service. Because managers use a voting algorithm, a majority of managers needs to be active for the group to function. This majority is called a quorum. If quorum is lost, access to data is lost. Be aware that access to the data is lost, not the data itself.

An odd number of managers is recommended, as a (low) even number of managers can end up in a state where no majority can be determined. The maximum number of managers is 5.

Failover manager

A failover manager is a special edition of a manager. Instead of running on a storage node, the failover manager runs as a virtual appliance. A failover manager's only function is maintaining quorum. When designing a SAN spanning two sites, running a failover manager is recommended. The optimum placement of the failover manager is a third site. Place an equal number of managers in both sites and run the failover manager at an independent site.

If a third site is not available, run the failover manager locally on a server, creating a logically separate site.
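The effect of the failover manager on quorum is easy to verify with a little arithmetic. The sketch below assumes a simple strict-majority rule and hypothetical site names; it is not how the managers actually vote internally.

```python
def has_quorum(total_managers, reachable_managers):
    """A strict majority of all configured managers must be reachable."""
    return reachable_managers > total_managers // 2


def survives_site_failure(managers_per_site, failed_site):
    """Check whether quorum is kept when one whole site goes dark."""
    total = sum(managers_per_site.values())
    reachable = total - managers_per_site[failed_site]
    return has_quorum(total, reachable)


# Two sites with two managers each, no failover manager.
without_fom = {"site-a": 2, "site-b": 2}
# Same layout plus a failover manager at an independent third site.
with_fom = {"site-a": 2, "site-b": 2, "site-c": 1}

print(survives_site_failure(without_fom, "site-a"))
print(survives_site_failure(with_fom, "site-a"))
```

Without the failover manager, losing either site leaves exactly half of the managers, which is not a majority. With it, any single site can fail and three of five managers remain.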

Volume names

And the last design issue: volume names cannot be changed. The volume name is the only setting that can’t be edited after creation. Plan your naming convention carefully; otherwise, you will end up recreating volumes and restoring data. If someone from HP is reading this, please change this behavior!

Get notifications of these blog postings and more DRS and Storage DRS information by following me on Twitter: @frankdenneman

HPE Insight Remote Support software delivers secure remote support for your HPE servers, storage, network, and SAN environments, which includes the P4000 SAN.

Note: HPE StoreVirtual Storage is the new name for HPE LeftHand Storage and HPE P4000 SAN solutions. LeftHand Operating System (LeftHand OS) is the new name for SAN/iQ.

Important: Each P4000 Storage Node counts as 30 monitored devices within Insight RS.

Important: For every 100 P4000 devices being monitored by Insight RS, add 1 GB of free disk space to the Hosting Device requirements.
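The two sizing notes above lend themselves to a quick calculation. This sketch rests on assumptions that the notes do not spell out: it treats "P4000 devices" as storage nodes and rounds every started block of 100 nodes up to a full gigabyte. The function name is hypothetical.

```python
import math

DEVICES_PER_NODE = 30  # each P4000 Storage Node counts as 30 monitored devices


def insight_rs_sizing(storage_nodes):
    """Return (monitored device count, extra free disk space in GB).

    Assumptions: 1 GB per started block of 100 storage nodes, rounded up.
    """
    monitored_devices = storage_nodes * DEVICES_PER_NODE
    extra_disk_gb = math.ceil(storage_nodes / 100)
    return monitored_devices, extra_disk_gb


print(insight_rs_sizing(12))
print(insight_rs_sizing(250))
```

Check the result against the Hosting Device requirements in the Insight RS documentation before relying on it.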

P4000 documentation is available at: www.hpe.com/support/hpesc. Search for LeftHand P4000 SAN Solutions.

To configure your P4000 Storage Systems to be monitored by Insight RS, complete the following sections:

Install and configure communication software on storage systems

To configure your monitored devices, complete the following sections:

You need to do the complete install of Centralized Management Console (CMC) to install the SNMP MIBs. If you do not modify the SNMP defaults that CMC uses, then SNMP should work with Insight Remote Support without modification.

Insight RS supports CMC 9.0 and higher (CMC 11.0 is recommended).

CMC 11.0 can be used to manage LeftHand OS 9.5, 10.x, 11.0, and 12.5 storage nodes.

The Windows version of CMC has the following requirements:

| Memory size | Free disk space |
|---|---|
| 100 MB RAM during run-time | 150 MB disk space for complete install |

Note: CMC can be installed on the Hosting Device or it can be installed on a separate system. If CMC is already installed on another system, it does not need to be installed on the Hosting Device.

Install the centralized management console (CMC) on the computer that you will use to administer the SAN. You need administrator privileges while installing the CMC.

Insert the P4000 Management SW DVD in the DVD drive. The installer should launch automatically. Or, navigate to the executable (:GUIWindowsDisk1InstDataVMCMC_Installer.exe).

Or download CMC at: h20392.www2.hpe.com/portal/swdepot/displayProductInfo.do?productNumber=StoreVirtualSW.

Click the Complete install option, which is recommended for users that use SNMP.

Note: If you already have Service Console installed, you don't need to disable it. Insight Remote Support can co-exist with Service Console without conflict.

Use the Find Nodes wizard to discover the storage systems on the network, using either IP addresses or host names, or by using the subnet and gateway mask of the storage network.

The found storage systems appear in the available category in the CMC.

Note: LeftHand Operating System (LeftHand OS) is the new name for SAN/iQ.

Use CMC to upgrade to LeftHand OS 9.5 or higher (LeftHand OS 11.0 is recommended) on the P4000 Storage Systems. If your P4000 Storage Systems already have LeftHand OS 9.5 installed, you do not need to perform this procedure.

Note: For LeftHand OS 9.5 devices, Patch Set 05 is required for all devices.

CMC version 9.0 and higher provide the option to download LeftHand OS upgrades and patches from HPE. Once the current upgrades and patches are downloaded from HPE to the CMC system, CMC can be used to update Management Groups or nodes with these changes.

When you upgrade the LeftHand OS software on a storage node, the version number changes. Check the current software version by selecting a storage node in the navigation window and viewing the Details tab window.

Note: Directly upgrading from LeftHand OS 9.5 to LeftHand OS 12.5 is not supported.

Important: When installing/upgrading LeftHand OS, do not modify the default SNMP settings. The default settings are used by Insight Remote Support, and communication between the P4000 Storage System and the Hosting Device will not function properly if the SNMP settings are modified. SNMP is enabled by default in LeftHand OS. In CMC 9.0 and higher, SNMP traps are modified at the Management Group level, not at the node level.

Understand best practices

LSMD upgrade — LSMD upgrade is required for upgrading from LeftHand OS 7.x.

Virtual IP addresses — If a Virtual IP (VIP) address is assigned to a storage node in a cluster, the VIP storage node needs to be upgraded last. The VIP storage node appears on the cluster iSCSI tab, see figure 'Find the Storage Node Running the VIP'.

- Upgrade the non-VIP storage nodes that are running managers, one at a time.

- Upgrade the non-VIP non-manager storage nodes.

- Upgrade the VIP storage node.
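The upgrade sequence listed above can be expressed as a simple sort. This is a planning aid only, with hypothetical node names and a made-up data layout; the actual upgrade is still performed through CMC.

```python
def upgrade_order(nodes, vip_node):
    """Sort nodes into the recommended upgrade sequence.

    Order: non-VIP nodes running managers, then non-VIP nodes without
    managers, then the node that currently holds the VIP.
    """
    def rank(node):
        if node["name"] == vip_node:
            return 2
        return 0 if node["manager"] else 1

    # sorted() is stable, so nodes with the same rank keep their order.
    return [node["name"] for node in sorted(nodes, key=rank)]


# Hypothetical cluster; nsm-1 runs a manager and currently holds the VIP.
cluster_nodes = [
    {"name": "nsm-1", "manager": True},
    {"name": "nsm-2", "manager": False},
    {"name": "nsm-3", "manager": True},
    {"name": "nsm-4", "manager": False},
]

print(upgrade_order(cluster_nodes, vip_node="nsm-1"))
```

Note that the VIP node goes last even when it also runs a manager.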

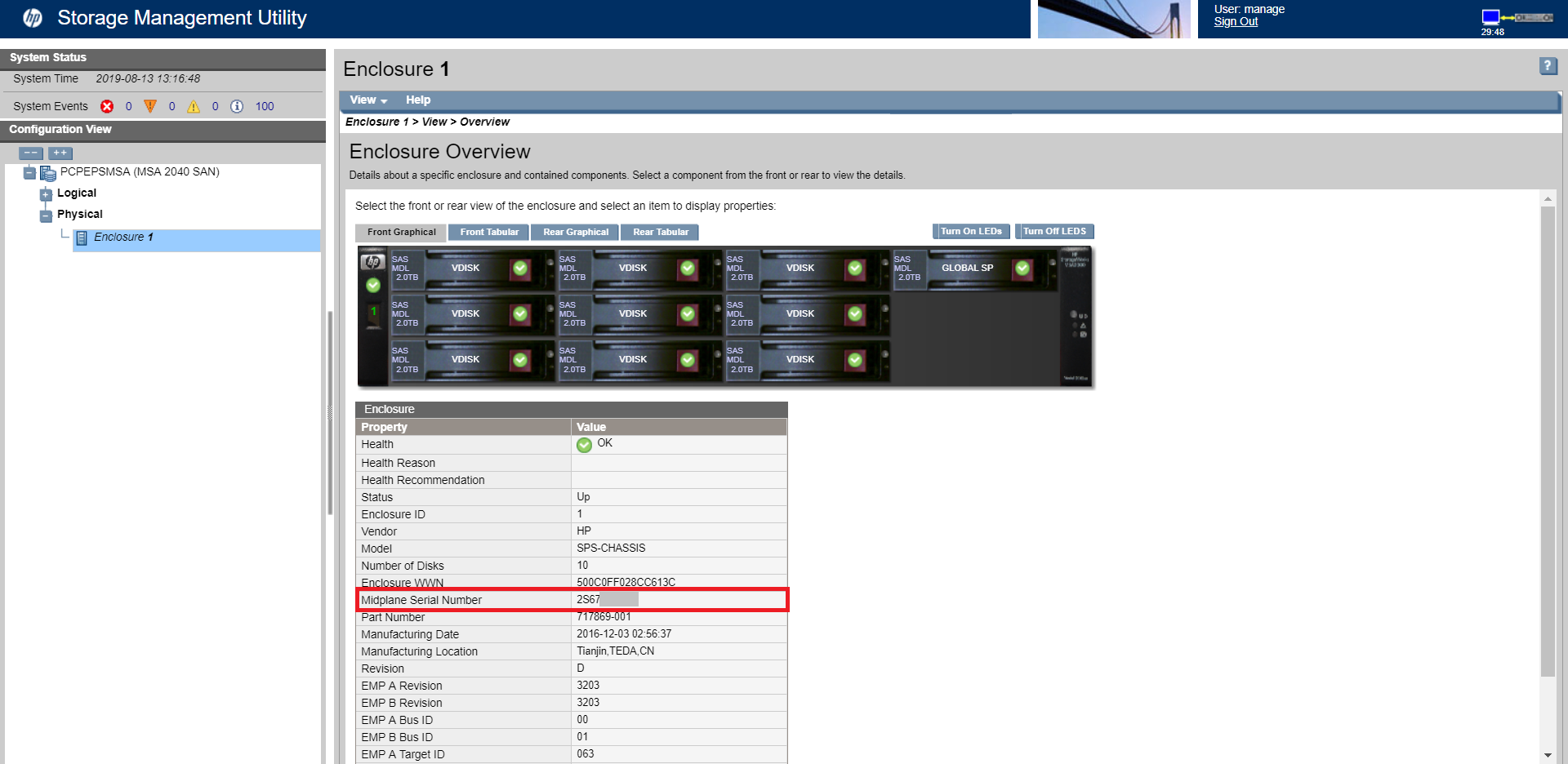

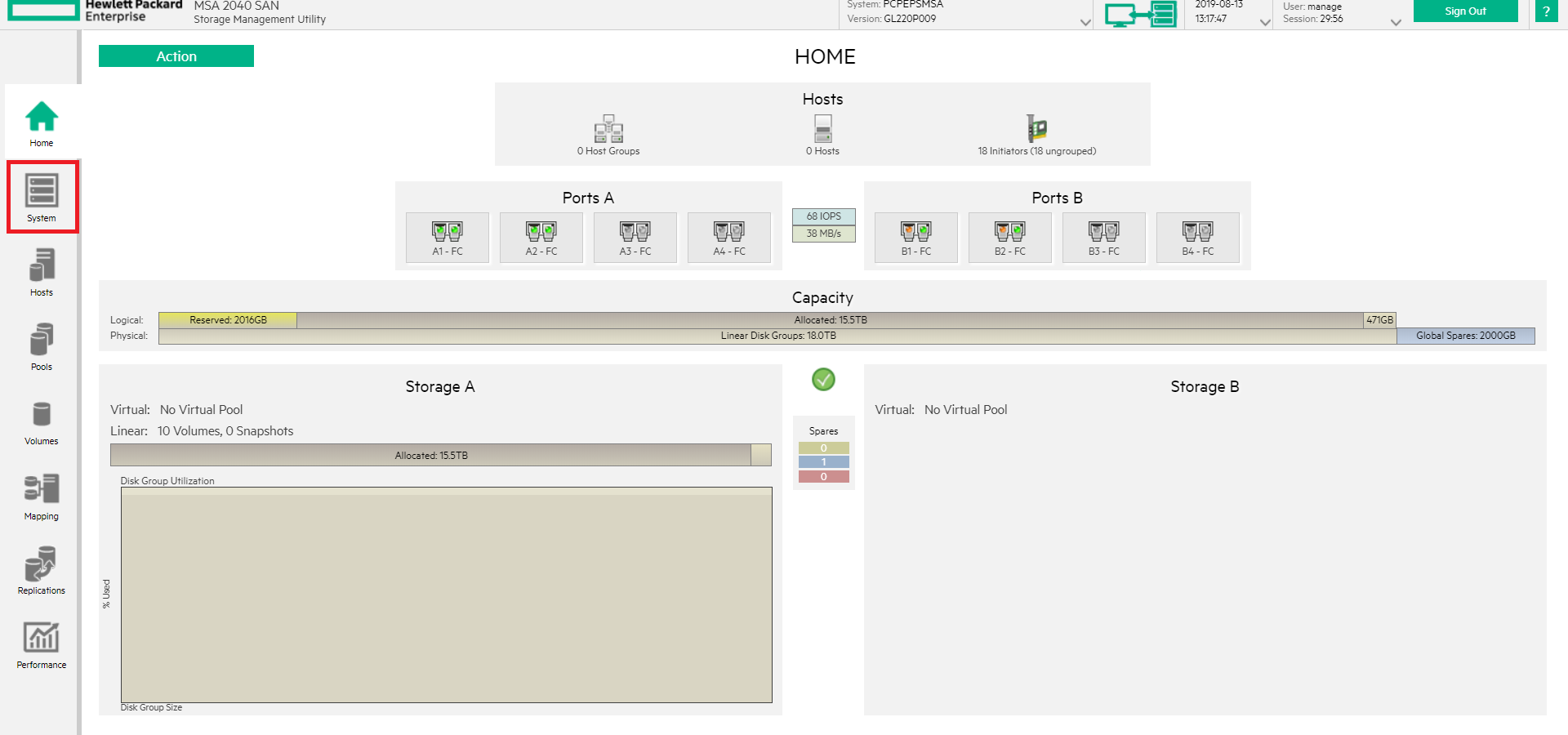

Find the Storage Node Running the VIP

Remote Copy — If you are upgrading management groups with Remote Copy associations, you should upgrade the remote management groups first. If you upgrade the primary group first, Remote Copy may stop working temporarily, until both the primary management group and the remote group have finished upgrading. Upgrade the primary site immediately after upgrading the remote site. Refer to 'How to Verify Management Group Version'.

Select the type of upgrade

CMC supports two methods of upgrades, as shown in figure 'Viewing the CMC Upgrade/Installation Window'.

One-at-a-time (recommended) - this is the default and only method that should be used if the storage nodes exist in a management group.

Simultaneous (advanced) - this allows you to upgrade multiple storage nodes at the same time if they are not in a management group. Use this only for storage nodes in the Available pool.

Caution: Do not select 'Simultaneous (advanced)' if your storage nodes are in a production cluster.

Viewing the CMC upgrade/installation window

Increase the size of the OS disk on the VSAs

Due to changes in the size of the VMware tools that get installed during software upgrades from pre-8.5 versions of LeftHand OS, you must increase the size of the OS disk before upgrading the VSA. Additional space requirements are necessary for future software releases, as well. Therefore, we recommend increasing the size of the OS disk to accommodate both requirements at this time.


Note: These instructions apply to VMware ESX Server. Other VMware products have similar instructions for extending a virtual disk. Please consult the appropriate VMware documentation for the product you are using.

To increase the OS disk size on the VSA, complete the following steps:

Using the CMC, power off the VSA.

Open the VI Client and select VSA → Edit Settings → Hardware.

Select Hard disk 1 (verify that the Virtual Device Node is SCSI (0:0)).

Under Disk provisioning, change the provisioned size to 8 GB.

Click OK.

Repeat these steps for Hard disk 2 (verify the Virtual Device Node is SCSI (0:1))

Using the VI Client, power on the VSA.

Find the VSA in the CMC and apply the upgrade.

Verify management group version

When upgrading from version LeftHand OS 7.x to release 9.5 or higher, the management group version will not move to the new release version until all storage nodes in the management group (and in the remote management group if a Remote Copy relationship exists) are upgraded to release LeftHand OS 9.5 or higher.

When upgrading from LeftHand OS version 7.x to release 9.5 or higher, the upgrade process validates the hardware identity of all of the storage nodes in the management group. If this validation fails for any reason, the management group version will not be upgraded to 9.5 or higher. For example, if a management group has a mix of platforms, some of which are unsupported by a software release, then only the supported platforms are upgraded successfully. The management group version will not be upgraded as long as the unsupported platforms remain in that management group.

To verify the management group version, complete the following steps:

- In the CMC navigation window, select the management group, and then select the Registration tab. The management group version number is at the top of the Registration Information section, as shown in figure 'Verifying the management group version number'.

Verifying the management group version number


Check for patches

After you have upgraded LeftHand OS, use CMC to check for applicable patches required for your storage node. CMC 9.0 and higher shows available patches and can be used to download them from HPE to the CMC system.

Use the following procedure to verify your SNMP settings. If you did not modify the LeftHand OS SNMP settings when you installed/upgraded LeftHand OS then you should not need to make any updates during the following procedure.

With LeftHand OS, you can configure different severity Alert levels to send from the Management Group. Under Insight Remote Support, configure each Management Group to send v1 SNMP traps of Critical and Warning levels in Standard message text length.

LeftHand OS 9.5 and higher Management Groups configure the Hosting Device SNMP trap host destination once at the Management Group level. Even though you only configure SNMP once at the Management Group level under CMC 10.0 and higher, you need to configure SNMP on the Hosting Device to allow traps from all LeftHand OS nodes.

Note: For LeftHand OS Management Groups, users can configure the Hosting Device trap host destination with the P4000 CLI createSNMPTrapTarget command instead of using CMC. The getGroupInfo command will show the current SNMP settings and SNMP trap host destinations configured at the Management Group level.

Note: If using the P4000 CLI (CLIQ) createSNMPTrapTarget or getGroupInfo commands, the path environment variable is not updated when the P4000 CLI is installed with Insight RS. You will need to use the full path for the P4000 CLI, which is [InsightRS_Installation_Folder]P4000.

To verify and/or update your SNMP settings, complete the following steps:

Open CMC.

Verify that SNMP is enabled for each storage system:

Note: LeftHand OS ships by default with SNMP enabled for all storage systems and configured with the 'Default' Access Control list.

Note: LeftHand OS Management Groups configure the Hosting Device SNMP trap host destination once at the Management Group level. With LeftHand OS, you can configure different severity Alert levels to send from the Management Group. Under Insight Remote Support, configure each Management Group to send v1 SNMP traps of Critical and Warning levels in Standard message text length.

Select SNMP in the left menu tree and open the SNMP General tab.

Verify that the Agent Status is enabled.

Verify that the P4000 Storage System SNMP Community String is set to public or the same value configured in the Insight RS Console for SNMP discovery.

In the Access Control field, verify that either Default is listed or the Hosting Device host IP address is listed. The Default option configures SNMP to be accessed by the public community string for all IP addresses.

Note: The SNMP settings on the P4000 Storage Systems need to match the SNMP settings on the Hosting Device.

Select Alerts in the left menu tree, and verify that alerts are configured with the 'trap' option for each storage system.

Note: LeftHand OS ships by default with traps set for all alert cases.

Add the Hosting Device IP address to the P4000 Storage System's SNMP trap send list. The Hosting Device IP address is needed to configure SNMP traps on each storage system. Note that in CMC 9.0 and higher, SNMP traps are configured at the Management Group level, not at the node level.

Select SNMP in the left menu tree and open the SNMP Traps tab.

Open the Edit SNMP Traps dialog by browsing to SNMP Trap Tasks → Edit SNMP Traps.

In the Edit SNMP Traps dialog, click Add.

In the Add IP or Hostname dialog, type the IP address or host name into the IP or Hostname field. Verify that the Trap Version is v1, and click OK.

- Repeat steps 3 to 4 for each P4000 Storage System. Alternatively, you could also configure one node using steps 3 to 4, then use the CMC copy node configuration option to copy the configuration to all other nodes.

Add an additional CMC user with read-only credentials. This is recommended if you don't want the Hosting Device system administrator to have create/delete control of the storage systems.

Select Administration in the left menu tree.

Create a user group with read-only access, if one does not already exist. Browse to Administrative Tasks → New Group.

Create a new user. First select the group you created in the previous step, then go to Administrative Tasks → New User. Type the User Name, Password, and click Add to add this user to the read-only user group you created.

| Protocol | Ports | Source | Destination | Function | Required/Optional |
|---|---|---|---|---|---|
| ICMP | N/A | Hosting Device | Monitored Systems | Provides system reachability (ping) check during system discovery and before other operations. | Required |
| TCP | 5989 | CMC (can be running on Hosting Device) | Monitored Systems | P4000 Centralized Management Console (CMC). | Required |
| TCP | 5989 | Hosting Device | Monitored Systems | Remote Support P4000 Integration Module - P4000 CLI API. | Required |
| TCP | 7905 | Monitored Systems | Hosting Device | Secure HTTP (HTTPS) port used by the listener running in the Director's Web Interface. The monitored host connects to the Hosting Device on this port (e.g. https://<hostname>:7905). | Required |
| UDP | 161 | Hosting Device | Monitored Systems | SNMP. This is the standard port used by SNMP agents on monitored systems. The Hosting Device sends requests to devices on this port. | Required |
| UDP | 162 | Monitored Systems | Hosting Device | SNMP Trap. This is the port used by Insight RS to listen to SNMP traps. | Required |
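Before discovery, it can save time to confirm that the TCP ports from the table are reachable through any firewalls in between. The following is a minimal sketch using only the Python standard library; the function name `tcp_port_open` and the example host name in the usage note are hypothetical. It covers the TCP rows only, since ICMP and the UDP-based SNMP ports cannot be verified with a plain TCP connect.

```python
import socket

# TCP ports taken from the table above.
REQUIRED_TCP_PORTS = {
    5989: "CMC and P4000 CLI API, toward the monitored systems",
    7905: "HTTPS listener, toward the Hosting Device",
}


def tcp_port_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port can be established."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name resolution errors.
        return False
```

For example, running `tcp_port_open("nsm-1.example.local", 5989)` from the Hosting Device would test the path to one storage node, and running the check for port 7905 from a machine on the storage network would test the opposite direction.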

To discover your monitored devices, complete the following sections:

Add P4000 SAN Solution (SAN/iQ) protocol to the Insight RS Console

To add the P4000 SAN Solution (SAN/iQ) protocol, complete the following steps:

- In a web browser, log on to the Insight RS Console (https://<hosting_device_ip_or_fqdn>:7906).

Create a Named Credential for every set of Management Group credentials:

- In the Insight RS Console, navigate to Company Information → Named Credentials.

- Click Add New Credential.

- Type a Credential Name.

- In the Protocol drop-down list, select P4000 SAN Solution (SAN/iQ).

- Type the Username and Password for the Management Group created in Configuring SNMP on the P4000 Storage System. These credentials can be either full LeftHand OS management group or read-only LeftHand OS management group credentials.

- Click Save.

Add Discovery credentials for the P4000 devices:

- In the Insight RS Console, navigate to Discovery → Credentials.

Create discovery protocol credentials for each Named Credential created earlier.

- In the Select and Configure Protocol drop-down list, select P4000 SAN Solution (SAN/iQ).

- Click New.

- In the Named Credential drop-down list, select the Named Credential created earlier.

- Click Add.

To discover the device from the Insight RS Console, complete the following steps:

- In a web browser, log on to the Insight RS Console.

- In the main menu, select Discovery and click the Sources tab.

Expand the IP Addresses section and add the IP address for your device:

- Click New.

- Select the Single Address, Address Range, or Address List option.

Type the IP address(es) of the devices to be discovered.

Note: When discovering new P4000 devices, do not include any P4000 Management Group Virtual IP (VIP) addresses. VIP addresses are created when you create and configure P4000 clusters. Instead, discover P4000 devices using individual IP addresses, or create IP discovery ranges excluding all P4000 VIP addresses.

If you do discover a P4000 VIP address, you should delete the managed entity for the VIP address from Insight Remote Support Advanced before running any collections or capturing any test traps. After deleting the VIP address entity, re-discover the node using the actual IP address for the P4000 device.

- Click Add.

Click Start Discovery.

Note: Insight RS filters out iLO 4 but not iLO 2 or iLO 3 devices. If an iLO 2 or iLO 3 device is discovered, manually delete it on the Device Details screen.
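The advice to keep VIP addresses out of discovery can be prepared ahead of time by generating the address list with the VIPs removed. The sketch below uses Python's standard `ipaddress` module; the function name and all addresses are hypothetical, and the resulting list is meant to be pasted into the Address List option by hand.

```python
import ipaddress


def discovery_addresses(first_ip, last_ip, vip_addresses):
    """List every address from first_ip to last_ip, skipping the VIPs."""
    first = ipaddress.ip_address(first_ip)
    last = ipaddress.ip_address(last_ip)
    vips = {ipaddress.ip_address(vip) for vip in vip_addresses}

    addresses = []
    current = first
    while current <= last:
        if current not in vips:
            addresses.append(str(current))
        current += 1
    return addresses


# Hypothetical storage network where 10.0.0.12 is a cluster VIP.
print(discovery_addresses("10.0.0.10", "10.0.0.13", ["10.0.0.12"]))
```

Discovering only the individual node addresses avoids having to delete a VIP entity and re-discover the node afterwards.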

To verify the P4000 was discovered correctly, complete the following steps:


Verify that the appropriate protocols have been assigned to the P4000 device:

- In the Insight RS Console, navigate to Devices and click the P4000 Device Name.

- On the Credentials tab, verify that the P4000 SAN Solution (SAN/iQ) and SNMPv1 protocols have been assigned to the device.

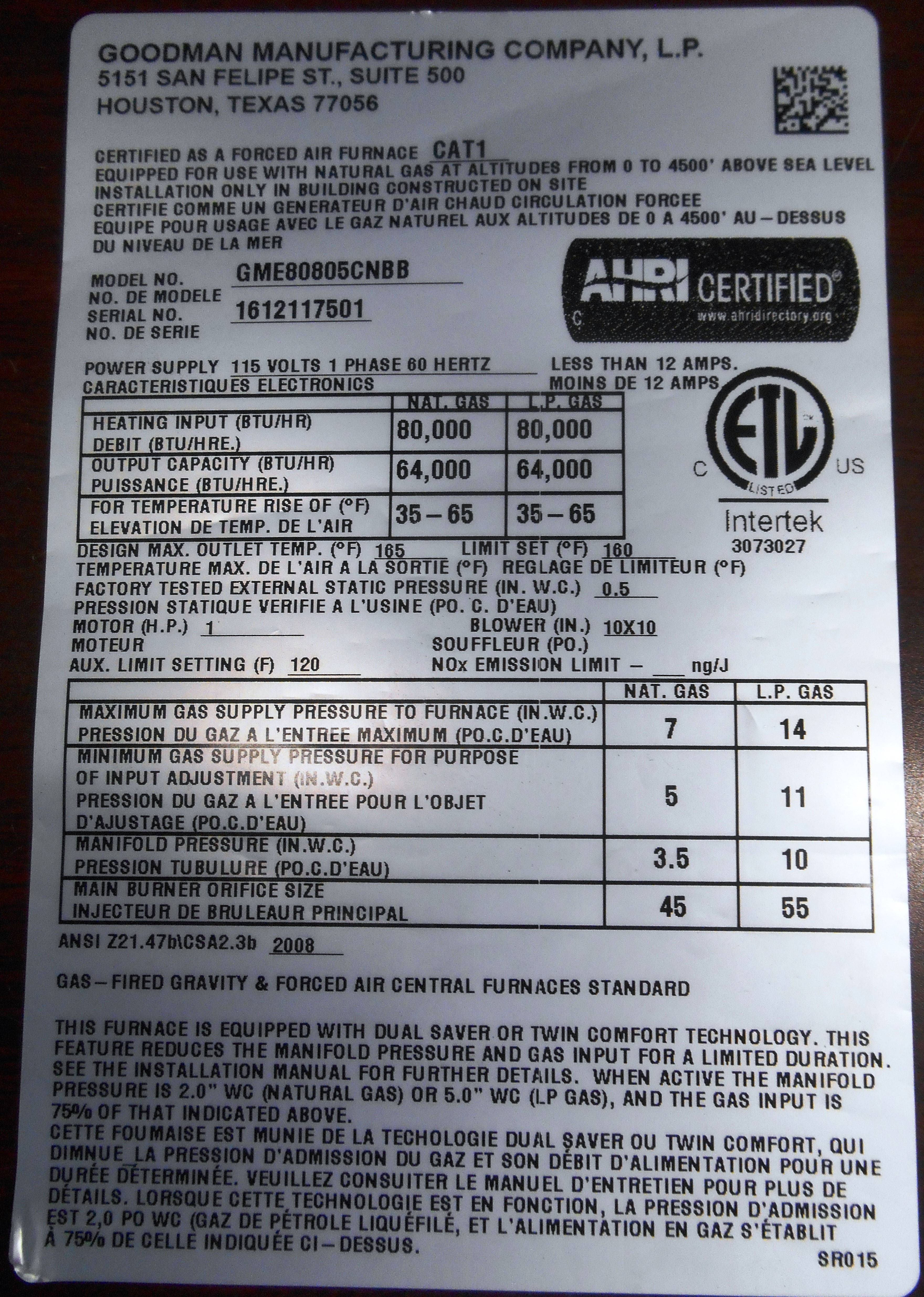

To add the P4000 device's warranty and contract information to Insight RS, complete the following steps:

- In the Insight RS Console, navigate to Devices and click the P4000 Device Name.

- Expand the Hardware section, and type the Serial Number in the Override Serial Number field and the Product Number in the Override Product Number field.

- Click Save Changes.

To verify the device was discovered correctly, complete the following steps:

- In a web browser, log on to the Insight RS Console.

- In the main menu, select Devices and click the Device Summary tab.

Make sure the Status column contains a success icon.

If there is no success icon, see Resolve monitored device status issues for troubleshooting information.

To verify communication between your monitored device and Insight RS, complete the following sections:

Send a test event

To verify that the P4000 Storage System(s) are communicating with the Hosting Device, open CMC, select at least one storage node, and send a test trap to the Hosting Device. Select SNMP in the left menu tree and open the SNMP Traps tab. In the SNMP Trap Tasks drop-down list, select Send Test Trap.

Note: In CMC, SNMP traps are configured at the Management Group level, not at the node level.

Go to the Hosting Device configurations to verify that the test event was posted to the Hosting Device logs. The CMC read-only credentials were verified during device discovery, when each LeftHand OS device was added to the Hosting Device monitored list.

Verify collections in the Insight RS Console

Collections are not automatically run after discovery. HPE recommends that you manually run a collection after discovery completes in order to verify connectivity. Run the collection schedule manually on the Collection Services → Collection Schedules tab.

Verify that this collection ran successfully on the Collection Services → Basic Collection Results tab in the Insight RS Console.

- Log on to the Insight RS Console.

- In the main menu, select Collection Services and then click the Basic Collection Results tab.

- Expand the P4000 Family Configuration Collection section.

- Locate the entry for your device and check the Result column. If the collection was successful, a success icon appears. If it failed, an error icon appears.